SOTA | State of the Art in Augmented Reality for Mobile Applications

By Sebastian Zettl on 02.03.2025

Abstract

Augmented Reality (AR) has evolved from an experimental technology into a mainstream tool used by millions of people. This paper looks at Augmented Reality in the mobile space, first reviewing the key developments that made AR what it is today. It then examines the tracking approaches used on mobile devices, such as sensor-based and vision-based tracking. Finally, it summarizes the key points and identifies avenues for further research in the mobile AR space.

Introduction

Augmented Reality (AR) has become an important tool for mobile development in recent years. Phones are becoming more powerful, enabling the wider public to use Augmented Reality, and apps like the IKEA Place app showcase use cases for Augmented Reality in the e-commerce sector.

Zhou et al. (2008) state that “Augmented Reality (AR) is a technology which allows computer generated virtual imagery to exactly overlay physical objects in real-time” (p. 193). AR “augments” or supplements our reality, and Azuma (1997) wrote that AR systems have three distinct characteristics:

- It combines the real and virtual worlds.

- It is interactive in real-time.

- It should be registered in 3-D, meaning that the virtual elements are anchored in the real world, appearing as if they are part of it.

Augmented Reality is only one part of a wider group of immersive technologies that alter our reality in some way. Tremosa (2024) highlights the differences between these technologies, all of which fall under the umbrella term Extended Reality (XR). AR is on one side of the spectrum, only overlaying digital elements onto the real world. Virtual Reality (VR) falls on the other side of the spectrum, transporting the user into a completely digital environment. Mixed Reality (MR) lies in the middle and combines both AR and VR. MR uses the real world and places digital elements into it, with which the user can then interact.

This paper focuses only on Augmented Reality, specifically AR on mobile devices. First, it gives a brief overview of important developments in AR. It then goes over the different tracking methods in the mobile space and briefly examines some use cases in which Augmented Reality was used in mobile apps. Lastly, it summarizes the findings and gives an outlook on future work.

Background

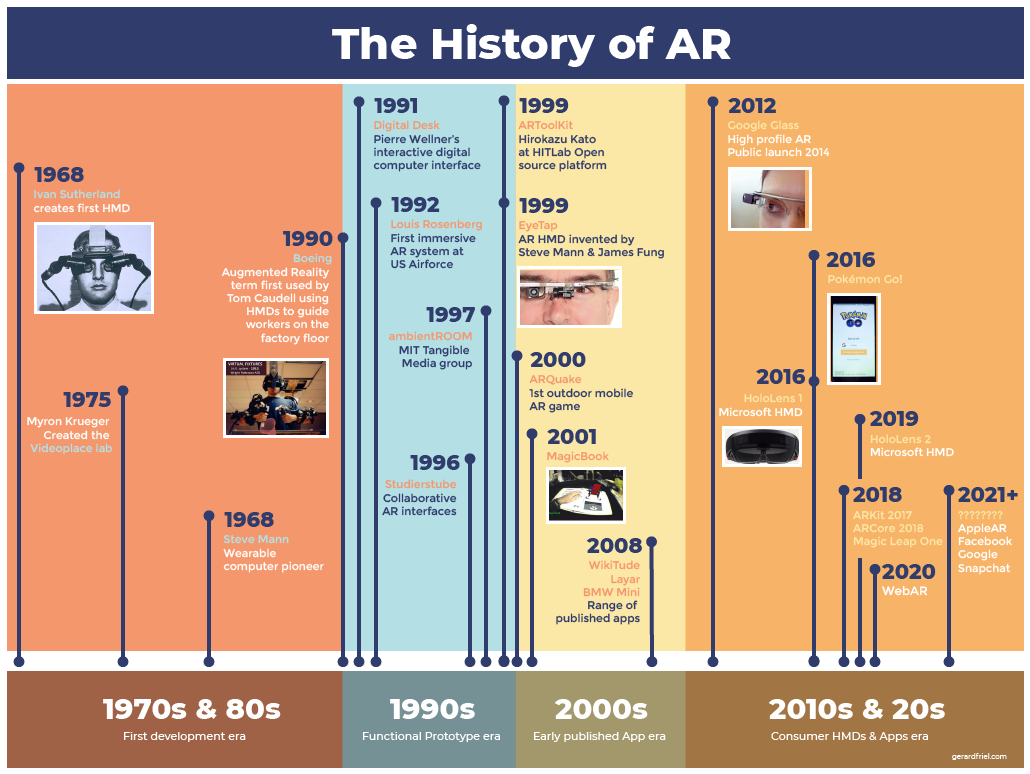

The history of Augmented Reality goes back several decades. Billinghurst et al. (2015) provide a well-documented overview of the different developments in Augmented Reality throughout its history. Fig. 1 shows a timeline of the events mentioned in this section.

The first computer-generated use of AR was in 1968 by Ivan Sutherland, who developed a head-mounted display (HMD) called “The Sword of Damocles”. The HMD was capable of overlaying three-dimensional graphics onto the real world while the user was wearing it.

Over time the technology was further researched and developed, especially in an industrial setting. For example, Caudell & Mizell (1992) explored the use of AR technology to help Boeing workers more easily create wire harnesses. Within the published work, Caudell first used the term “Augmented Reality” and is credited with coining the term.

What truly opened AR technology to the masses was the 1999 release of the ARToolkit library as open-source software. It took care of tracking virtual objects in relation to the real world and enabled developers to create AR applications more easily.

With the ARToolkit available, AR also began to be used for games. One example is ARQuake, described by Piekarski & Thomas (2002). It was an extension of the game Quake that could be played outdoors using an HMD and a backpack containing a computer. The player had to physically move around, with monsters and weapons appearing around them in the real world.

Another development came in 2009, when FLARToolkit, a variation of the ARToolkit built specifically for Adobe Flash, was released. This variation enabled the use of AR in a web browser.

An app that truly brought AR technology to the worldwide public, and showed how popular it can be when applied well, was the game Pokemon Go, released in 2016 by Niantic. Using the phone’s GPS and camera, Pokemon are overlaid onto the real world, allowing users to catch them. It was so successful that it made $207 million within its first month and around $1.03 billion in its first year after release (Iqbal, 2024).

The last two important developments for AR technology in a mobile context named in this paper are the release of ARKit in 2017 and the stable release of ARCore in 2018. Both are development kits: ARKit was developed by Apple for iOS devices, and ARCore was developed by Google for Android devices (Nowacki & Woda, 2024).

Tracking Technology

One of the most important parts of AR technology is analyzing the real world, placing virtual objects within it, and keeping track of where they should stay. Qiao et al. (2019) give a good summary of the different tracking methods in a mobile context. In total, there are three distinct groups: sensor-based, vision-based, and hybrid (a combination of sensor and vision). This section explains the different mechanisms and shows their differences.

Sensor-based Mechanisms

These are among the most lightweight tracking options on mobile devices. A variety of sensors are already built into a mobile phone, and AR applications make use of them. Gyroscopes, GPS, compasses, and many more are used to keep track of 3-D objects in the real world. While normally only a single sensor is used, the data of multiple sensors can be combined to increase tracking accuracy. One problem with sensor-based tracking is that tracking errors can accumulate, and correcting them in real time is difficult. Syed et al. (2023) go into more depth on specific sensor-based tracking methods. For example, inertial tracking uses gyroscopes, magnetometers, and accelerometers to determine the orientation and velocity of tracked objects.
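To make the idea of combining sensors and the problem of accumulating errors more concrete, the following is a minimal sketch of a complementary filter, a classic way to blend gyroscope and accelerometer readings. It is not taken from Syed et al. (2023); the function names, the axis convention, the blend weight `alpha`, and the simulated bias are all assumptions chosen for illustration.

```python
import math


def integrate_gyro_only(gyro_rates, dt, initial_angle=0.0):
    """Integrate angular velocity (rad/s) into an angle; any sensor bias accumulates."""
    angle = initial_angle
    for rate in gyro_rates:
        angle += rate * dt
    return angle


def complementary_filter(gyro_rates, accel_samples, dt, alpha=0.98, initial_angle=0.0):
    """Estimate a pitch angle (radians) by blending gyroscope and accelerometer data.

    gyro_rates: angular velocity around the pitch axis in rad/s, one value per step.
    accel_samples: (a_forward, a_up) accelerometer readings in m/s^2, one pair per step
        (assumed axis convention: a level, stationary device reads (0, 9.81)).
    alpha: weight of the integrated gyroscope estimate (assumed value).
    """
    angle = initial_angle
    estimates = []
    for rate, (a_forward, a_up) in zip(gyro_rates, accel_samples):
        gyro_angle = angle + rate * dt             # smooth, but drifts over time
        accel_angle = math.atan2(a_forward, a_up)  # noisy, but anchored to gravity
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Simulated stationary, level device whose gyroscope has a small constant bias.
steps, dt, bias = 1000, 0.01, 0.01
gyro = [bias] * steps
accel = [(0.0, 9.81)] * steps

gyro_only = integrate_gyro_only(gyro, dt)
fused = complementary_filter(gyro, accel, dt)[-1]
print(f"gyro only: {gyro_only:.4f} rad, fused: {fused:.4f} rad")
```

In this simulation the true angle is zero. The gyroscope-only estimate drifts to about 0.1 rad after ten seconds, while the blended estimate settles at roughly 0.005 rad because the accelerometer keeps pulling it back toward the direction of gravity.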

An example of sensor-based tracking would be Pokemon Go. It uses location-based GPS tracking to overlay the Pokemon onto the real world by tracking the location of the person carrying the phone. While the camera is also used, its only function is to add the real world as a background, not to keep track of 3-D objects in relation to it.
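Location-based tracking of this kind essentially comes down to comparing two GPS coordinates. The sketch below uses the haversine formula to compute the distance and compass bearing from a user to a virtual object. It is only an illustration of the principle, not how Pokemon Go is implemented; the function names, the example coordinates, and the 40 m interaction radius are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (distance in meters, initial bearing in degrees) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)

    # Haversine formula for the great-circle distance.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing, measured clockwise from north.
    y = math.sin(dlambda) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlambda)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def is_in_range(user, target, radius_m=40.0):
    """Decide whether a virtual object is close enough to be shown (hypothetical rule)."""
    distance, _ = distance_and_bearing(*user, *target)
    return distance <= radius_m


user = (48.2082, 16.3738)    # example coordinates, chosen arbitrarily
target = (48.2083, 16.3738)  # about 11 m further north
print(distance_and_bearing(*user, *target), is_in_range(user, target))
```

The bearing can be compared with the compass heading of the phone to decide where on the screen the object should appear, which is why GPS and compass are often used together.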

Vision-based Mechanisms

Compared to sensor-based tracking, vision-based tracking places a much higher load on phones. It uses visual sensors, like the camera, to track 3-D objects. There are two different methods through which visual tracking is done: a marker-based and a markerless method.

Marker-Based

The marker-based method is one of the earlier methods used for tracking and puts less of a load on the phone. Also known as fiducial tracking, it works, as Syed et al. (2023) explain, by creating artificial landmarks. This could be a piece of paper, an LED, a QR code, etc. Through visual means, these markers can then be identified within the scene and allow 3-D objects to be tracked.
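Once a marker of known physical size has been found in the camera image, simple geometry already yields useful tracking information. The sketch below estimates where the marker sits in the image and how far away it is, using the pinhole camera model. It assumes the marker roughly faces the camera and that the four corner positions have already been detected; the function names and all numeric values are hypothetical. A full system would estimate the complete 3-D pose instead.

```python
import math


def marker_center(corners):
    """Average the four corner pixels to get the marker center in the image."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def mean_side_length(corners):
    """Average length in pixels of the four marker edges (corners given in order)."""
    total = 0.0
    for i in range(4):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % 4]
        total += math.hypot(x2 - x1, y2 - y1)
    return total / 4


def estimate_marker_distance(corners, marker_size_m, focal_length_px):
    """Pinhole model: distance = focal length * real size / apparent size."""
    return focal_length_px * marker_size_m / mean_side_length(corners)


# A 10 cm marker that appears 100 px wide to a camera with an 800 px focal length.
corners = [(300, 200), (400, 200), (400, 300), (300, 300)]
print(marker_center(corners), estimate_marker_distance(corners, 0.10, 800.0))
```

Because the marker size is known in advance, a single camera image is enough to recover scale, which is one reason this method is comparatively cheap.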

Markerless

Syed et al. (2023) also explain that markerless vision-based tracking relies directly on the data captured by the visual sensors. While this method puts a heavier load on mobile phones, it is becoming increasingly popular, since phones are becoming more powerful and can better handle that load. Generally, the sensors used to capture the information can be divided into three categories, namely visible light tracking, 3-D structure tracking, and infrared tracking. While all three of these can be found on mobile phones, only visible light tracking is available on all modern phones. 3-D structure tracking and infrared tracking are only available on more high-end or specialized phones.

Visible light tracking works, as explained by Klopschitz et al. (2010), by using the visual data from the camera to map out the environment based on key visual features such as edges, corners, or other patterns.
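To illustrate what finding a corner in camera data can mean, here is a small sketch of the classic Moravec corner detector on a tiny grayscale image. This is a stand-in chosen for its simplicity, not the method used by Klopschitz et al. (2010) or by current AR frameworks; the function names, the test image, and the threshold are assumptions. The idea is that a patch around a corner changes when shifted in any direction, whereas a patch on an edge or a flat area can be shifted in at least one direction without changing.

```python
SHIFTS = [(1, 0), (0, 1), (1, 1), (1, -1), (-1, 0), (0, -1), (-1, -1), (-1, 1)]


def moravec_score(image, x, y, window=1):
    """Smallest sum of squared differences between a patch and its shifted copies."""
    best = None
    for dx, dy in SHIFTS:
        ssd = 0
        for wy in range(-window, window + 1):
            for wx in range(-window, window + 1):
                a = image[y + wy][x + wx]
                b = image[y + wy + dy][x + wx + dx]
                ssd += (a - b) ** 2
        best = ssd if best is None else min(best, ssd)
    return best


def detect_corners(image, threshold, window=1):
    """Return (x, y) pixels whose Moravec score reaches the threshold."""
    height, width = len(image), len(image[0])
    margin = window + 1  # keep shifted patches inside the image
    corners = []
    for y in range(margin, height - margin):
        for x in range(margin, width - margin):
            if moravec_score(image, x, y, window) >= threshold:
                corners.append((x, y))
    return corners


# 8x8 test image: dark background with a bright square in the lower-right quadrant.
image = [[1 if x >= 4 and y >= 4 else 0 for x in range(8)] for y in range(8)]
print(detect_corners(image, threshold=2))
```

On this test image only the corner of the bright square at pixel (4, 4) reaches the threshold. Pixels along the straight edges of the square score zero because the patch can slide along the edge unchanged.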

An example of visual Augmented Reality tracking is, as explained by Alves & Reis (2020), the IKEA Place app (Launch of New IKEA Place App – IKEA Global, 2017). The app allows users to take furniture from a virtual catalog and place it inside their house to see how it would look. The app used Apple's ARKit, and later ARCore, to place furniture through a markerless method.

Hybrid Mechanisms

A hybrid tracking mechanism is, as the name suggests, a method that combines different tracking mechanisms, mainly sensor-based and vision-based ones. Pinz et al. (2002) explain that the goal is to combine the strengths of the individual mechanisms, such as accuracy or tracking speed, while reducing the weaknesses that come with relying on a single sensor, like jitter or heavy computational load.
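One simple way to picture how two tracking sources can be combined is inverse-variance weighting: each estimate is weighted by how much it is trusted, and the result is more certain than either input. The sketch below is a generic illustration under that assumption, not the method described by Pinz et al. (2002); the function name and the example numbers are hypothetical.

```python
def fuse_estimates(value_a, variance_a, value_b, variance_b):
    """Combine two independent estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    reliable source dominates. Returns (fused value, fused variance).
    """
    weight_a = 1.0 / variance_a
    weight_b = 1.0 / variance_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


# Position along a street in meters: a coarse GPS fix and a more precise vision-based fix.
gps_position, gps_variance = 10.0, 4.0
vision_position, vision_variance = 12.0, 1.0
print(fuse_estimates(gps_position, gps_variance, vision_position, vision_variance))
```

With these numbers the fused position is 11.6 m, closer to the vision-based estimate, and the fused variance of 0.8 is lower than that of either source alone.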

One example of a hybrid tracking app would be Google Live View, released in 2019, as explained by Hall (2019). It is an extension to the base Google Maps app, with which users can use their phone’s camera to get live directions and information about their surroundings. Through the camera, the surroundings are analyzed and elements like 3-D signposts are shown to help users find their way. This is coupled with GPS sensors to further enhance the tracking and guidance of the app.

Developer Knowledge about Mechanisms

Knowing the different tracking mechanisms, how they work, and what their advantages and disadvantages are is helpful for developers. But in-depth knowledge is not required to work with AR tracking. Frameworks and toolkits like ARCore or ARKit abstract away many of the mechanisms needed to track AR objects.

One example is ARCore's instant placement functionality. Often the phone first needs to scan the surroundings to establish a good tracking base, but with instant placement 3-D objects can be placed in the real world and tracked immediately. Once the object has been placed, its tracking is refined in real time (Place Objects Instantly | ARCore, 2024).

There is also a guide that details how instant placement is implemented. In rough terms, a session has to be created. Once it has been established, the application listens for a tap from the user. When the user taps, the placement point in the real world can be determined through a function call, and an anchor for the object can be created. No in-depth knowledge about specific sensors or about interpreting sensor data is needed (Instant Placement Developer Guide for Android | ARCore, 2024).
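The geometric idea behind placing an object before the scene is fully understood can be sketched independently of any framework: a screen tap defines a ray from the camera, and the object is put at a guessed distance along that ray until better depth information arrives. The following is a conceptual illustration only and does not use or reproduce the ARCore API; the function name, the camera intrinsics, and the distances are assumptions.

```python
import math


def unproject_tap(u, v, distance_m, fx, fy, cx, cy):
    """Return the 3-D point (x, y, z) in camera coordinates for a screen tap.

    (u, v): tapped pixel. (fx, fy): focal lengths in pixels. (cx, cy): principal point.
    The point lies on the viewing ray through the pixel, distance_m from the camera,
    with +z pointing forward (assumed convention).
    """
    dx = (u - cx) / fx
    dy = (v - cy) / fy
    dz = 1.0
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    scale = distance_m / norm
    return (dx * scale, dy * scale, dz * scale)


# Hypothetical camera intrinsics for a 1080 x 1920 portrait image.
fx = fy = 1500.0
cx, cy = 540.0, 960.0

# First placement uses a guessed distance of 2 m.
initial = unproject_tap(700.0, 1200.0, 2.0, fx, fy, cx, cy)

# Later, once tracking reports the surface is 1.4 m away, the anchor moves along the same ray.
refined = unproject_tap(700.0, 1200.0, 1.4, fx, fy, cx, cy)
print(initial, refined)
```

Because both points lie on the same viewing ray, the object stays under the tapped pixel while its distance is corrected, which keeps the refinement visually unobtrusive.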

Conclusions

Since its beginnings, Augmented Reality has come a long way. From a head-mounted display to AR on mobile devices, Augmented Reality has become an important tool that is used by millions of people worldwide. With dedicated libraries like ARKit or ARCore, it has become increasingly easy for developers to start creating their own AR applications. By taking advantage of different tracking methods, whether sensor-based ones such as GPS or inertial trackers or vision-based ones such as the camera, there are many ways to overlay digital elements onto the real world. Different apps have already integrated AR or have been built from the ground up with it in mind, with great success. These range from Pokemon Go, which mostly overlays Pokemon onto a map and the real world through the use of GPS, to IKEA Place, which uses the phone’s camera to let users place digital furniture and see in real time how their home could be decorated. The number of such apps is likely to grow as AR continues to evolve and hardware becomes more powerful, allowing users and developers to take full advantage of AR.

Future work could focus on many different parts of Augmented Reality. One option would be to take a more detailed look at how the tracking and the sensors work, how they can interact with each other, and how this technology could be improved. Another avenue would be to integrate AR into different app concepts to see whether it enhances the user experience, or to develop something completely new, for example by integrating artificial intelligence to enhance Augmented Reality. Doing so within this paper would have exceeded its scope.

References

Alves, C., & Reis, J. (2020). The intention to use e-commerce using augmented reality – The case of IKEA Place. In Proceedings of the Conference on E-Commerce and Digital Marketing (pp. 114–123). Springer. https://doi.org/10.1007/978-3-030-40690-5_12

Azuma, R. T. (1997). A survey of augmented reality. Presence: Teleoperators and Virtual Environments, 6(4), 355–385. https://doi.org/10.1162/pres.1997.6.4.355

Billinghurst, M., Clark, A., & Lee, G. (2015). A survey of augmented reality. University of Canterbury. http://hdl.handle.net/10092/15494

Caudell, T., & Mizell, D. (1992). Augmented reality: An application of heads-up display technology to manual manufacturing processes. Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, 2, 659–669. https://doi.org/10.1109/HICSS.1992.183317

Friel, G. (2020, November 28). The history of AR. Gerard Friel. https://www.gerardfriel.com/ar/the-history-of-ar/

Hall, C. (2019, May 7). What is Google Maps AR navigation and Live View and how do you use it? Pocket-Lint. https://www.pocket-lint.com/what-is-google-maps-ar-navigation-live-view/

Google. (2024, October 31). Instant placement developer guide for Android | ARCore. Google Developers. https://developers.google.com/ar/develop/java/instant-placement/developer-guide

Iqbal, M. (2024, January 10). Pokémon Go revenue and usage statistics (2024). Business of Apps. https://www.businessofapps.com/data/pokemon-go-statistics/

Klopschitz, M., Schall, G., Schmalstieg, D., & Reitmayr, G. (2010). Visual tracking for augmented reality. 2010 International Conference on Indoor Positioning and Indoor Navigation, 1–4. https://doi.org/10.1109/IPIN.2010.5648274

IKEA. (2017, September 12). Launch of new IKEA Place app – IKEA Global. IKEA.

Nowacki, P., & Woda, M. (2024). Capabilities of ARCore and ARKit platforms for AR/VR applications. ResearchGate. https://doi.org/10.1007/978-3-030-19501-4_36

Piekarski, W., & Thomas, B. (2002). ARQuake: The outdoor augmented reality gaming system. Communications of the ACM, 45(1), 36–38. https://doi.org/10.1145/502269.502291

Pinz, A., Brandner, M., Ganster, H., Kusej, A., Lang, P., & Ribo, M. (2002, April). Hybrid tracking for augmented reality. ResearchGate. https://www.researchgate.net/publication/229025765_Hybrid_tracking_for_augmented_reality

Google. (2024, December 12). Place objects instantly | ARCore. Google Developers. https://developers.google.com/ar/develop/instant-placement

Qiao, X., Ren, P., Dustdar, S., Liu, L., Ma, H., & Chen, J. (2019). Web AR: A promising future for mobile augmented reality—State of the art, challenges, and insights. Proceedings of the IEEE, 107(4), 651–666. https://doi.org/10.1109/JPROC.2019.2895105

Syed, T. A., Siddiqui, M. S., Abdullah, H. B., Jan, S., Namoun, A., Alzahrani, A., Nadeem, A., & Alkhodre, A. B. (2023). In-depth review of augmented reality: Tracking technologies, development tools, AR displays, collaborative AR, and security concerns. Sensors, 23(1), 146. https://doi.org/10.3390/s23010146

Tremosa, L. (2024, November 30). Beyond AR vs. VR: What is the difference between AR vs. MR vs. VR vs. XR? Interaction Design Foundation. https://www.interaction-design.org/literature/article/beyond-ar-vs-vr-what-is-the-difference-between-ar-vs-mr-vs-vr-vs-xr

Zhou, F., Duh, H. B.-L., & Billinghurst, M. (2008). Trends in augmented reality tracking, interaction and display: A review of ten years of ISMAR. 2008 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, 193–202. https://doi.org/10.1109/ISMAR.2008.4637362
